A Cognitive Bias Detector Built in 24 Hours for Figma's Make-a-Thon

Role

UX/UI Designer

Project Type

Vibe Coding

Duration

24 Hours

The idea for Cognito started while I was writing a blog post on cognitive biases in UX. I wanted to create a resource that made it easier for designers to spot these hidden pitfalls. Around the same time, Figma and Contra announced their Make-a-Thon, a contest encouraging designers to experiment with their new Vibe Coding AI tool. I took this as an opportunity to explore how Vibe Coding and AI could fit into my design workflow.

Although the Make-a-Thon spanned a full week, I only had about 24 hours to design and build a working prototype due to personal time constraints. This meant focusing on speed and feasibility rather than polish. The bigger challenge came from the tools themselves: today’s LLMs are inherently non-deterministic, so careful contextual prompting was critical to get consistent results. On top of that, reliably identifying cognitive biases would ultimately require dedicated model training, something outside the scope of Vibe Coding. These constraints shaped Cognito into more of a proof-of-concept than a finished product.

The goal was to create a minimum viable product (MVP) as a proof of concept and to explore how vibe coding could help me prototype rapidly.

With this project, I wanted to explore how vibe coding can shorten the design process. Instead of going through the traditional design cycle, I jumped straight into rapid prototyping and iteration.

Between iterations of prompting, I analyzed what could be improved and occasionally gave ChatGPT screenshots and prompted it for critiques as well.

Prompting ChatGPT for Critiques

First prototype based on vibe coding.

The first iterations provided a great starting point for structure; however, the result was very bare-bones and lacked color and functionality. It also introduced elements that weren't needed, like a sign-in button.

When I used ChatGPT to analyze and critique the designs, it provided useful suggestions like:

Providing rotating sample scenarios

Better CTA hierarchy

Addressing the lack of motion

It also identified some things that didn't really apply, such as the placeholder text being low contrast, which is often done on purpose to signal that it is a placeholder. In the next iterations, I took the idea of rotating sample scenarios and improved on it by pre-filling a sample scenario and adding a random button that generates different scenarios to test.
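
The pre-filled scenario and random button described above can be sketched in a few lines. This is a minimal illustration, not the code the prototype actually generated: the scenario texts, the `SAMPLE_SCENARIOS` list, and the `next_scenario` function are all hypothetical names chosen for the example.

```python
import random

# Hypothetical sample scenarios (assumption); the prototype's real
# scenario texts are not shown in this write-up.
SAMPLE_SCENARIOS = [
    "Only 2 rooms left at this price! Book now.",
    "Most popular plan: 9 out of 10 teams choose Pro.",
    "Was $99, now $49 for a limited time.",
]


def next_scenario(current, scenarios=SAMPLE_SCENARIOS, rng=random):
    """Return a random scenario that differs from the one currently shown."""
    candidates = [s for s in scenarios if s != current]
    # Fall back to the full list if there is nothing else to pick from.
    return rng.choice(candidates or list(scenarios))


# Pre-fill the input with the first scenario, then simulate one click
# on the random button.
prefilled = SAMPLE_SCENARIOS[0]
shuffled = next_scenario(prefilled)
```

Excluding the current scenario from the candidates means a click on the random button always changes what is on screen, which keeps the control from feeling broken.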

Analysis results screen.

Thanks to rapid prototyping, I was able to quickly make changes and fix issues like colors, layout, and even add features like identifying specific sections of text that trigger a bias or UX violation. Although this was not always accurate, it provided a framework for how it could work.
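
To give a sense of what "identifying specific sections of text" could look like, here is a rough sketch that returns character spans a UI could highlight. It assumes simple keyword patterns, which is only a stand-in: the `BIAS_PATTERNS` table, the `Highlight` class, and `find_highlights` are hypothetical, and this kind of matching is exactly the sort of approach that is not reliably accurate.

```python
import re
from dataclasses import dataclass

# Hypothetical trigger phrases (assumption). A real detector would need
# far more than keyword matching to be reliable.
BIAS_PATTERNS = {
    "Scarcity": r"only \d+ left|limited time",
    "Social proof": r"most popular|\d+ out of \d+",
    "Anchoring": r"was \$\d+",
}


@dataclass
class Highlight:
    bias: str   # name of the bias the phrase may trigger
    start: int  # index of the first matched character
    end: int    # index just past the last matched character
    text: str   # the matched phrase itself


def find_highlights(text):
    """Return the spans of text that match a trigger phrase, in reading order."""
    found = []
    for bias, pattern in BIAS_PATTERNS.items():
        for match in re.finditer(pattern, text, flags=re.IGNORECASE):
            found.append(Highlight(bias, match.start(), match.end(), match.group()))
    return sorted(found, key=lambda h: h.start)


sample = "Was $99, now $49. Only 3 left!"
highlights = find_highlights(sample)
```

Returning start and end offsets rather than just the bias names lets the results screen wrap the exact phrase in a highlight and attach an explanation to it.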

In addition, I added a page that outlines various biases and UX laws, along with common examples, to help users learn about biases and UX principles.

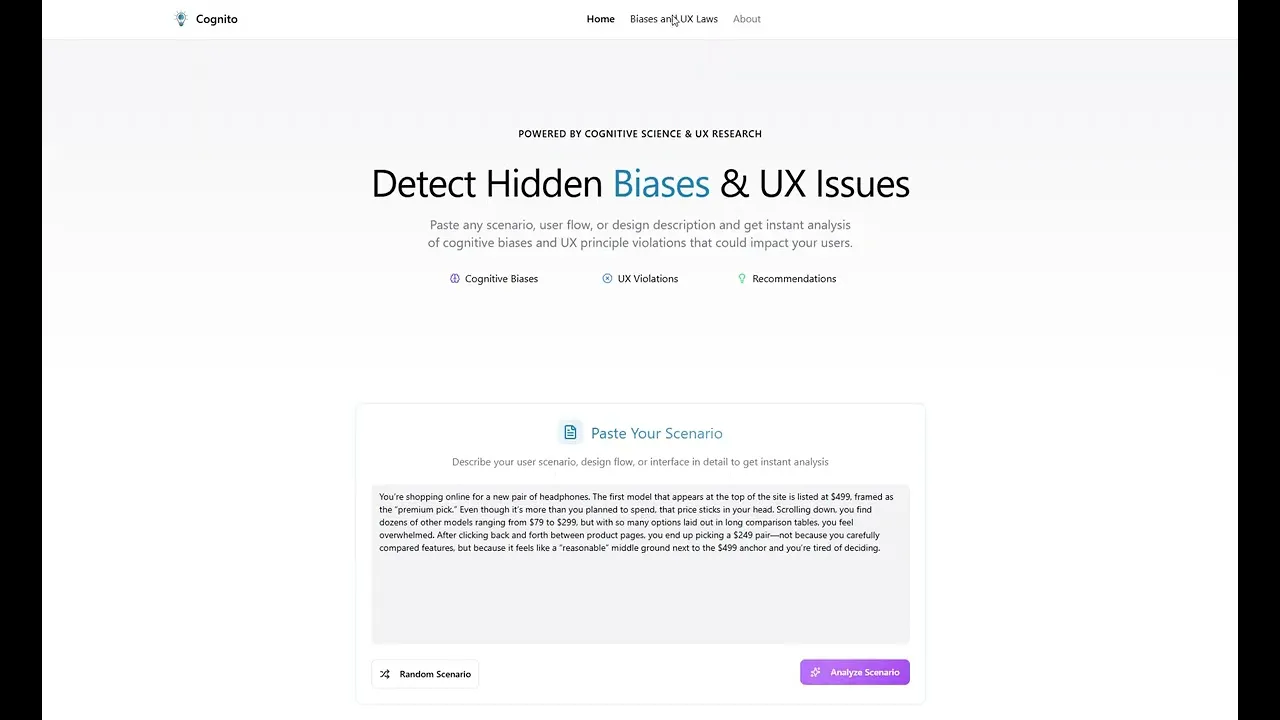

After roughly 70 iterations, all within 24 hours, I ended up with the following MVP. You can click the link below to visit and play around with it yourself.

This project taught me a lot about Vibe Coding and using AI in my design workflow. Here are the key takeaways:

Vibe Coding with AI is great for rapid iterations, testing ideas, and creative exploration

Knowing how to code lets you tweak small things directly rather than spending prompts on them, which reduces friction

There are limits to vibe-coded algorithms, especially for things like pattern recognition. Model-based functionality would benefit from specialized model training; in its current state, the bias detection is not reliably accurate

LLMs like ChatGPT are great for ideation and brainstorming and often help spark new ideas

Generalized prompts produce very similar apps. Starting from an existing design system or mockups/wireframes in Figma before prompting can help solve this.

Overall, this was a fantastic learning experience, and I was impressed by how quickly Vibe Coding enabled me to generate functional prototypes. Looking ahead, I can see how, with established wireframes and a solid design system in place, Vibe Coding could produce test-ready prototypes, accelerating both iteration and usability testing.